Oracle Database 11g Data Guard Configuration on Linux

Basic Information About the Machines

1. Primary DB:

Database Name: ORCL

Unique Name: Primary

Machine IP: 192.168.0.1

TNS Service Name: toStandby

2. Standby DB:

Database Name: ORCL

Unique Name: Standby

Machine IP: 192.168.0.2

TNS Service Name: toPrimary

Configuring For Remote Installation

To configure Secure Shell:

1. Create the public and private keys on all nodes:

[PrimaryDB]$ /usr/bin/ssh-keygen -t dsa

[StandbyDB]$ /usr/bin/ssh-keygen -t dsa

2. Concatenate id_dsa.pub for all nodes into the authorized_keys file on the first node:

[PrimaryDB]$ ssh 192.168.0.1 "cat ~/.ssh/id_dsa.pub" >> ~/.ssh/authorized_keys

[PrimaryDB]$ ssh 192.168.0.2 "cat ~/.ssh/id_dsa.pub" >> ~/.ssh/authorized_keys

3. Copy the authorized_keys file to the other nodes:

[PrimaryDB]$ scp ~/.ssh/authorized_keys 192.168.0.2:/home/oracle/.ssh/

4. Make sure the ~/.ssh directory, including the authorized_keys file, is present on all nodes.
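Before moving on, it is worth confirming that the oracle user can reach every node without a password prompt. A minimal bash sketch, assuming the two node IPs used in this post and that ssh is on the PATH; `check_ssh_equivalence` is a hypothetical helper, not an Oracle tool:

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify passwordless SSH from this node to each listed node.
# BatchMode=yes makes ssh fail instead of prompting for a password.
check_ssh_equivalence() {
    local node failed=0
    for node in "$@"; do
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$node" true; then
            echo "OK:   $node"
        else
            echo "FAIL: $node (password prompt or connection error)"
            failed=1
        fi
    done
    return "$failed"
}

# Usage (as the oracle user, on each node):
#   check_ssh_equivalence 192.168.0.1 192.168.0.2
```

If a node reports FAIL, check permissions first: with sshd's default StrictModes setting, key authentication is refused when ~/.ssh or authorized_keys is writable by group or others (chmod 700 ~/.ssh; chmod 600 ~/.ssh/authorized_keys).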

CONFIGURATION ON PRIMARY DATABASE

Step # 1.

SQL> Shutdown Immediate;

SQL> Startup Mount;

SQL> Alter Database ArchiveLog;

Step # 2.

Now create a standby control file on the PRIMARY site:

SQL> Alter Database Create Standby Controlfile as '/opt/oracle/backup/standcontrol.ctl';

Copy this newly created standby control file to the standby site, into the directory where the other database files (data files, log files and control files) are kept, and rename the copies to Control01.ctl, Control02.ctl and Control03.ctl so that they match the CONTROL_FILES parameter.

Step # 3.

Create pfile from spfile on the primary database:

SQL> Create pfile from spfile='/opt/oracle/OraDB11g/dbs/spfileORCL.ora';

Step # 4.

Then add the following settings to the initORCL.ora file on the PRIMARY machine:

db_unique_name='PRIMARY'

FAL_Client='toPrimary'

FAL_Server='toStandby'

Log_archive_config='DG_CONFIG=(primary,standby)'

Log_archive_dest_1='Location=/opt/oracle/backup VALID_FOR=(ALL_LOGFILES,ALL_ROLES) db_unique_name=primary'

Log_archive_dest_2='Service=toStandby lgwr async VALID_FOR=(ONLINE_LOGFILES,PRIMARY_ROLE) db_unique_name=standby'

Log_archive_dest_state_1=ENABLE

Log_archive_dest_state_2=DEFER

Service_names='primary'

Standby_File_Management='AUTO'
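Before the edited file is used, a quick pre-flight check can catch omissions. The sketch below is a hypothetical helper (`check_dg_pfile` is not an Oracle tool): it reports which of the Data Guard parameters listed above are missing and flags typographic quotes (‘ ’ “ ”), which easily creep in when settings are copied from a web page and which Oracle does not accept as string delimiters. The same check applies to the standby's initORCL.ora.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check for a Data Guard pfile (e.g. initORCL.ora).
# Reports missing parameters and lines that contain typographic quotes.
check_dg_pfile() {
    local pfile="$1" param status=0
    local required=(db_unique_name fal_client fal_server log_archive_config
                    log_archive_dest_1 log_archive_dest_2
                    log_archive_dest_state_1 log_archive_dest_state_2
                    service_names standby_file_management)
    for param in "${required[@]}"; do
        # Accept "param=" or "*.param=" (the form CREATE PFILE writes), any case.
        if ! grep -Eiq "^[[:space:]]*(\*\.)?${param}[[:space:]]*=" "$pfile"; then
            echo "MISSING: $param"
            status=1
        fi
    done
    # Fixed-string search, so it works regardless of the current locale.
    if grep -nF -e '‘' -e '’' -e '“' -e '”' "$pfile"; then
        echo "WARNING: typographic quotes found; replace them with straight quotes (')"
        status=1
    fi
    return "$status"
}
```

Usage: `check_dg_pfile /opt/oracle/OraDB11g/dbs/initORCL.ora`; a non-zero return code means something needs attention before the instance is started.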

Step # 5

Create the password file from the bash shell:

$ orapwd file=/opt/oracle/OraDB11g/dbs/orapwORCL password=oracle entries=5 ignorecase=y force=y

The force=y option replaces an existing password file.

Step # 6.

Now restart the PRIMARY database in MOUNT mode and enable forced logging:

SQL> Shutdown Immediate;

SQL> Startup Mount;

SQL> Alter Database Force Logging;

Step # 7

On the PRIMARY site, also create a standby redo log file for the standby database. This standby redo log file will be used later by the Data Guard broker and observer. If you do not plan to use the Data Guard broker, there is no need to create a standby redo log file.

SQL> Alter Database Add Standby Logfile ('/opt/oracle/oradata/ORCL/StandbyRedo.log') size 150m;

Step # 8

Now shut down the primary database:

SQL> Shutdown Immediate;

Copy all the data files and the standby redo log file from the PRIMARY site to the same locations on the STANDBY site. Then start the PRIMARY database in MOUNT mode again.

Step # 9

Now shut down the primary database:

SQL> Shutdown Immediate;

Create the spfile from the pfile:

SQL> Create spfile from pfile;

Then restart the primary database.

Step # 10

SQL> Startup;

Step # 11

On the PRIMARY site, add a net service name to the tnsnames.ora file through which the PRIMARY site connects to the STANDBY machine:

TOSTANDBY =

(DESCRIPTION =

(ADDRESS_LIST =

(ADDRESS = (PROTOCOL = TCP) (HOST = 192.168.0.2) (PORT = 1521))

)

(CONNECT_DATA =

(SERVICE_NAME = Standby)

)

)
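Writing these entries by hand makes it easy to slip in a typo such as PROTOCAL. A minimal bash sketch that prints an entry in the same layout; `tns_entry` is a hypothetical helper, and the default tnsnames.ora location $ORACLE_HOME/network/admin only applies when TNS_ADMIN is not set:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a tnsnames.ora entry in the layout used above.
# Arguments: alias, host, port, service name.
tns_entry() {
    local alias="$1" host="$2" port="$3" service="$4"
    cat <<EOF
${alias} =
  (DESCRIPTION =
    (ADDRESS_LIST =
      (ADDRESS = (PROTOCOL = TCP)(HOST = ${host})(PORT = ${port}))
    )
    (CONNECT_DATA =
      (SERVICE_NAME = ${service})
    )
  )
EOF
}

# Usage on the primary (check the target file first):
#   tns_entry TOSTANDBY 192.168.0.2 1521 Standby >> "$ORACLE_HOME/network/admin/tnsnames.ora"
```

On the standby the call would be `tns_entry TOPRIMARY 192.168.0.1 1521 primary`. After appending an entry, `tnsping TOSTANDBY` is a quick way to confirm that the alias resolves and the listener answers.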

Step # 12

Register the primary database in the listener.ora file, then stop and start the listener:

> lsnrctl stop

> lsnrctl start
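The post does not show the listener.ora entry itself. One common form is static registration in a SID_LIST_LISTENER block in $ORACLE_HOME/network/admin/listener.ora. The sketch below prints such a block; the helper name is hypothetical, and it assumes the default listener name LISTENER, the ORACLE_HOME /opt/oracle/OraDB11g implied by the spfile path in Step 3, and the SID ORCL:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a static registration block for listener.ora.
# Arguments: global database name (service), ORACLE_HOME, ORACLE_SID.
# Assumes the listener uses the default name LISTENER.
listener_sid_entry() {
    local gdbname="$1" home="$2" sid="$3"
    cat <<EOF
SID_LIST_LISTENER =
  (SID_LIST =
    (SID_DESC =
      (GLOBAL_DBNAME = ${gdbname})
      (ORACLE_HOME = ${home})
      (SID_NAME = ${sid})
    )
  )
EOF
}

# Usage on the primary:
#   listener_sid_entry primary /opt/oracle/OraDB11g ORCL
```

On the standby, the same helper with `standby` as the first argument produces the matching entry. After restarting the listener, `lsnrctl status` shows which services it knows about.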

Step # 13

Query the DATABASE_ROLE column of V$DATABASE to confirm the role of the primary database. It should return PRIMARY.

SQL> select database_role from v$database;

DATABASE_ROLE

-------------------------

PRIMARY

Step # 14

Also check the connectivity from the SQL prompt.

SQL> connect sys/oracle@toStandby as sysdba;

Connected.

The service can also be created with the Net Manager utility (netmgr) that ships with Oracle Database, and connectivity can be tested there as well.

CONFIGURATION ON STANDBY DATABASE

Step # 1

Check the archiving mode with the following command:

SQL> Archive Log List;

Step # 2

Then create pfile from spfile on the standby database:

SQL> Create pfile from spfile='/opt/oracle/OraDB11g/dbs/spfileStandby.ora';

Step # 3

Then add the following settings to the initORCL.ora file on the STANDBY machine:

db_unique_name='STANDBY'

FAL_Client='toStandby'

FAL_Server='toPrimary'

Log_archive_config='DG_CONFIG=(primary,standby)'

Log_archive_dest_1='Location=/opt/oracle/backup VALID_FOR=(ALL_LOGFILES,ALL_ROLES) db_unique_name=standby'

Log_archive_dest_2='Service=toprimary VALID_FOR=(ONLINE_LOGFILES,PRIMARY_ROLE) db_unique_name=primary'

Log_archive_dest_state_1=ENABLE

Log_archive_dest_state_2=ENABLE

Service_names='standby'

Standby_File_Management='AUTO'

Step # 4

Create the password file from the bash shell:

$ orapwd file=/opt/oracle/OraDB11g/dbs/orapwORCL password=oracle entries=5 ignorecase=y force=y

The force=y option replaces an existing password file.

Step # 5

On the STANDBY site, add a net service name to the tnsnames.ora file through which the STANDBY site connects to the PRIMARY machine:

TOPRIMARY =

(DESCRIPTION =

(ADDRESS_LIST =

(ADDRESS = (PROTOCOL = TCP) (HOST = 192.168.0.1) (PORT = 1521))

)

(CONNECT_DATA =

(SERVICE_NAME = primary)

)

)

Step # 6

Check the connectivity from the SQL prompt.

SQL> Connect sys/oracle@toPrimary as sysdba;

Connected.

The service can also be created with the Net Manager utility (netmgr) that ships with Oracle Database, and connectivity can be tested there as well.

Step # 7

Register the standby database in the listener.ora file, then stop and start the listener:

> lsnrctl stop

> lsnrctl start

Step # 8

Now shut down the standby database:

SQL> Shutdown Immediate;

Create the spfile from the pfile:

SQL> Create spfile from pfile;

Then start the standby database as described in the next step.

Step # 9

Now start the STANDBY database and mount it as a standby database:

SQL> Startup NoMount;

SQL> Alter Database Mount Standby Database;

Enable forced logging:

SQL> Alter Database Force Logging;

Step # 10

Query the DATABASE_ROLE column of V$DATABASE to confirm the role of the standby database. It should return PHYSICAL STANDBY.

SQL> Select Database_role from v$Database;

DATABASE_ROLE

-------------------------

PHYSICAL STANDBY

LOG SHIPPING

On the PRIMARY site, enable log_archive_dest_state_2 to start shipping archived redo logs:

SQL> Alter system set Log_Archive_Dest_State_2=ENABLE scope=both;

Check the current log sequence number and the archiving mode with the following command:

SQL> Archive Log List;

Then switch the logfile.

SQL> Alter System Switch Logfile;

System Altered.

Now, on the primary site, check the status of the standby archiving destination:

SQL> Select Status, Error from v$Archive_dest where dest_id=2;

The STATUS column should show VALID. If it shows ERROR, check the connectivity between the primary and standby machines.
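The same check can be scripted, for example to run from cron after a log switch. A minimal sketch, assuming sqlplus is on the PATH and OS authentication ("/ as sysdba") works for the current user on the primary; `check_standby_dest` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the V$ARCHIVE_DEST query above.
# Prints the status of destination 2 and returns 0 only when it is VALID.
check_standby_dest() {
    local result
    result="$(sqlplus -s "/ as sysdba" <<'EOF'
set heading off feedback off pagesize 0
select status || ' ' || error from v$archive_dest where dest_id = 2;
exit
EOF
)"
    # Collapse newlines/extra spaces and trim the ends.
    result="$(printf '%s' "$result" | tr -s '[:space:]' ' ')"
    result="${result# }"
    result="${result% }"
    echo "dest_id=2: $result"
    [[ "$result" == VALID* ]]
}
```

When the status is ERROR, the printed line includes the ORA- message from the ERROR column, which usually points directly at the network or password-file problem.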

START PHYSICAL LOG APPLY SERVICE

On the STANDBY database, execute the following commands to start the Managed Recovery Process (MRP). The recovery command must be executed while the database is mounted.

SQL> Shutdown Immediate;

SQL> Startup Mount;

SQL> Alter Database Recover Managed Standby Database;

Database Altered.

When you run the above command, the current session hangs: without the DISCONNECT option, managed recovery runs in the foreground of your session, waiting for logs to arrive and applying them. To avoid this, run the command with the DISCONNECT option:

SQL> Alter Database Recover Managed Standby Database Disconnect;

The session is now returned to you, and MRP runs as a background process that applies the redo logs.
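To confirm that MRP is really running in the background, you can query V$MANAGED_STANDBY on the standby. A minimal sketch, assuming sqlplus is on the PATH and "/ as sysdba" OS authentication works; `mrp_running` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical check, run on the standby: is a managed recovery process (MRP0) active?
# Prints process, status and current sequence# when found; returns 1 otherwise.
mrp_running() {
    local out
    out="$(sqlplus -s "/ as sysdba" <<'EOF'
set heading off feedback off pagesize 0
select process || ' ' || status || ' ' || sequence# from v$managed_standby where process like 'MRP%';
exit
EOF
)"
    if [[ "$out" == *MRP* ]]; then
        echo "$out"
        return 0
    fi
    echo "MRP is not running"
    return 1
}
```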

You can check whether the logs have been applied by querying V$ARCHIVED_LOG:

SQL> Select Name, Applied, Archived from v$Archived_Log;

This query returns the name of each archived log file and whether it has been archived and applied.

Oracle 11g Data Guard Configuration Done.

Shailesh Gudimalla, Oracle Apps DBA. All postings are my own workshop examples; if you use them, please test them in your test environment first.

Tuesday, June 21, 2011

Proper Steps to Uninstall Oracle Database on Windows XP

2 START > PROGRAMS > ORACLE > OraDb10g_home1 > Oracle Installation Products > Universal Installer. Uninstall all Oracle products. Universal Installer itself cannot be removed this way.

3 START > RUN. Type regedit and press Enter. When the Registry Editor opens, select HKEY_LOCAL_MACHINE\SOFTWARE\ORACLE and press the Del key to delete the key.

4 START > RUN. Type regedit and press Enter. When the Registry Editor opens, select HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services, scroll through the list and delete all Oracle entries.

5 START > RUN. Type regedit and press Enter. When the Registry Editor opens, select HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\Eventlog\Application and delete all Oracle entries.

6 START > Control Panel > System > Advanced > Environment Variables. Delete the Oracle-related settings from the CLASSPATH and PATH environment variables.

7 START > ALL PROGRAMS: delete all Oracle program groups and icons.

8 Delete the C:\Program Files\Oracle directory.

9 Restart the computer. After the restart, the Oracle directory can be removed completely.

10 Delete the Oracle-related files: delete the default Oracle directory (C:\Oracle) with all its subdirectories, and delete the following files from the Windows directory (usually C:\WINDOWS): ORACLE.INI, oradim73.INI, oradim80.INI, oraodbc.ini, etc.

11 If the WIN.INI file contains an [ORACLE] section, delete that section.

12 If necessary, remove all Oracle-related ODBC DSNs.

13 In Event Viewer, delete the Oracle-related logs. If some individual DLL files cannot be removed, it does not matter.

Monday, June 20, 2011

Oracle 11g Release 2, New Features for DBA

1) The cluster is everywhere

In previous versions, Oracle Clusterware had to be installed in its own ORACLE_HOME and could only be used in a RAC environment. Oracle 11g R2 changes this completely: it introduces the Oracle Grid Infrastructure installation, which needs only one separate ORACLE_HOME containing both Oracle Clusterware and Oracle Automatic Storage Management (ASM). Grid Infrastructure is installed with the upgraded Oracle Universal Installer.

Single-instance high availability (Oracle Restart):- Oracle 11g R2 extends the high-availability features of Oracle Clusterware to any single-instance database, giving it some of the resilience of a RAC database. Oracle Restart, part of Oracle Grid Infrastructure, uses Oracle High Availability Services to control the server's Oracle components: when the server restarts, the listener, the ASM instance and the database are started automatically, which completely replaces the DBSTART script that DBAs often used. Likewise, when a single database instance crashes or terminates abnormally, Oracle Restart detects the failure and restarts the instance automatically, just as in a real RAC environment.

SRVCTL upgrade:- If you have managed a RAC environment in an earlier version, you are probably familiar with SRVCTL, the RAC maintenance tool. In 11g R2 this tool has been extended and can now also manage single-instance databases, listeners and ASM instances.

Cluster Time Synchronization Service:- Oracle 11g R2 requires the clocks of all RAC nodes to be synchronized. If you have ever had a node evicted from a RAC cluster, you know how much harder troubleshooting becomes when the servers' clocks, and therefore the timestamps in their log files, are out of sync. Earlier Oracle versions relied on the operating system's Network Time Protocol (NTP) to synchronize all nodes, which requires access to a standard time server. As an alternative to NTP, Oracle 11g R2 provides the new Cluster Time Synchronization Service (CTSS), which keeps the time consistent across all nodes of the cluster.

Grid Plug and Play:- In previous versions, the most complex part of configuring RAC was determining and setting the public, private and virtual IP addresses for every node. To simplify RAC installation, Oracle 11g R2 provides the new Grid Naming Service (GNS), which works with the domain name servers to allocate the IP address of each grid component. This feature is extremely useful when a cluster environment spans multiple databases.

Cleanly uninstalling RAC components:- If you have ever tried to remove every trace of RAC from multiple nodes, you will appreciate this new feature. In Oracle 11g R2, all the installation and configuration assistants, in particular Oracle Universal Installer (OUI), Database Configuration Assistant (DBCA) and Network Configuration Assistant (NETCA), have been enhanced so that RAC components can be uninstalled cleanly.

2) ASM joins the cluster

Oracle 11g R2 gives ASM a number of attractive new features. To begin with, ASM and Oracle Clusterware are now installed in the same Oracle Home, which eliminates the redundant Oracle Home that earlier installation methods required. ASM configuration has also been split out of DBCA into a dedicated tool, the Automatic Storage Management Configuration Assistant (ASMCA). The most interesting new ASM features are:-

Intelligent Data Placement:- In previous versions, configuring ASM disk storage might require the storage administrator to get involved and configure the disk I/O subsystem. With Oracle 11g R2 ASM you can place data files, redo log files and control files directly on the outer edge of the disk, where the cylinders are faster, for better performance.

EM Support Workbench extension:- In Oracle 11g R2, the Support Workbench in the Enterprise Manager console and the Automatic Diagnostic Repository (ADR) have been extended to support ASM diagnostics. All the diagnostic information can be packaged and sent directly to Oracle Support, so that ASM performance problems are resolved faster.

ASMCMD enhanced:- The ASM command-line utility (ASMCMD) has also received a number of enhancements, including:-

1) Starting and stopping the ASM instance.

2) Backing up, restoring and maintaining the ASM instance's server parameter file (spfile).

3) Monitoring ASM disk group performance with the iostat command.

4) Maintaining the volumes, directories and files of the new ASM Cluster File System (ACFS).

3) ACFS - a robust cluster file system

Oracle previously released the Oracle Cluster File System (OCFS) and later an enhanced version, OCFS2, which allows Oracle RAC instances to share read and write access to database files, redo log files and control files.

In addition, OCFS allowed a RAC database to keep the Oracle Cluster Registry (OCR) and voting disk files in the cluster file system. Since Oracle 10g R2 this has not been required, because the OCR and voting disk files can be stored on raw devices or raw block devices. However, if all copies of these files on the raw devices are lost, restoring them is cumbersome. Therefore, Oracle 11g R2 no longer supports placing these files on raw devices for new installations.

To make these critical files more resilient, Oracle 11g R2 allows the OCR and voting disks to be stored in ASM and supports up to five OCR copies, whereas earlier releases allowed only two (a primary OCR and a mirrored OCR). Oracle 11g R2 also formally introduces a new cluster file system, the ASM Cluster File System (ACFS). Beyond the database itself, almost all operating-system and application files can benefit from ACFS security and file-sharing features.

Dynamic Volume Manager:- Oracle 11g R2 provides the new ASM Dynamic Volume Manager (ADVM) to configure and maintain the volumes that hold ACFS file systems. Using an ASM disk group, you can build an ADVM volume device, store files on it, and resize the volume on demand. Most importantly, because ADVM is built on the ASM architecture, files stored in these volumes are well protected against accidental loss, since ASM provides RAID-like mirroring.

File access control:- You can grant read, write and execute permissions on ACFS directories and files with traditional Windows-style access control lists (ACLs) or Unix/Linux-style user/group/other permissions, and you can manage ACFS directory and file security both graphically through Enterprise Manager and from the ASMCMD command line.

File system snapshots (FSS):- Oracle 11g R2 can take snapshots of an ACFS file system. A snapshot is a read-only copy of a selected ACFS file system, kept in the same ACFS file system, and up to 63 independent snapshots can be kept per file system. This is very useful when an ACFS file is accidentally updated, deleted or otherwise damaged: with the 11g R2 Enterprise Manager console or the acfsutil command-line tool you can find the file and restore the appropriate version.

4) Improved Software Installation and Patching Process

Cluster Verification Utility integration:- The Cluster Verification Utility (CVU), introduced in Oracle 10g, is now fully integrated into Oracle Universal Installer (OUI) and the other configuration assistants (for example, DBCA).

Zero-downtime patching of the cluster:- When patching Oracle Clusterware, Oracle 11g R2 applies the patch as an out-of-place upgrade. This means there are two Oracle Homes, one of which holds the patched software, but only one Oracle Home is active at a time. Upgrades in Oracle 11g R2 therefore no longer require shutting down the whole Oracle cluster, which achieves true zero-downtime patching.

5) DBMS_SCHEDULER upgrade

The venerable DBMS_SCHEDULER package, which DBAs often use to schedule jobs, has been thoroughly updated.

File watcher:- Previous versions could not detect most of the events that trigger batch processing, such as the arrival of a file in a directory. Oracle 11g R2 eases this problem with the new file watcher: once the expected file arrives in the directory, DBMS_SCHEDULER detects it and records its arrival in an object of the new type SCHEDULER_FILEWATCHER_RESULT. A file watcher created with the new CREATE_FILE_WATCHER procedure signals DBMS_SCHEDULER to trigger the job.

Built-in email notification:- Whenever a DBMS_SCHEDULER job starts, fails or completes, its status can be sent immediately by email. This was possible in previous versions too, but only by calling the UTL_MAIL or UTL_SMTP package yourself; now the feature is built into DBMS_SCHEDULER.

Remote operation:- DBMS_SCHEDULER now allows the DBA to create and schedule jobs on remote databases.

Multiple destinations:- Finally, a DBMS_SCHEDULER job can now be scheduled on multiple database instances at the same time. This is very useful in a RAC environment, because a long-running task can be divided into several smaller parts that run on different database instances.

Oracle Database 11gR2 Grid Infrastructure and Automatic Storage Management for a Standalone Server (Part-1)

Requirements for Oracle Grid Infrastructure Installation

1) Memory Requirement

At least 1 GB of RAM

2) Disk Space Requirement

At least 3 GB of disk space

At least 1 GB of disk space in the /tmp directory

3) Software Requirement

Linux_11gR2_grid

4) ASMLib Software Requirement

oracleasm-support-2.1.3-1.el5.i386.rpm

oracleasmlib-2.0.4-1.el5.i386.rpm

oracleasm-2.6.18-164.el5-2.0.5-1.el5.i686.rpm

These rpm packages are available on the OEL 5.4 DVD.

5) Extra Disk Requirements

Unformatted disks are required; they are partitioned, marked as ASM disks and combined into an ASM disk group. In my case these drives are named /dev/sda, /dev/sdb and /dev/sdc.

Step 1

Log in as the root user.

Step 2

Create the accounts and groups

#groupadd -g 501 oinstall

#groupadd -g 502 dba

#groupadd -g 503 oper

#groupadd -g 504 asmadmin

#groupadd -g 505 asmoper

#groupadd -g 506 asmdba

#useradd -g oinstall -G dba,asmdba,oper oracle

#useradd -g oinstall -G asmadmin,asmdba,asmoper,oper,dba grid
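If Step 2 has to be repeated (for example after a failed attempt), groupadd and useradd fail for entries that already exist. A minimal sketch of an idempotent variant, using the same IDs and memberships as above; `create_oracle_accounts` and `run_cmd` are hypothetical helper names. Run it as root; with DRY_RUN=1 it only prints the commands it would run.

```shell
#!/usr/bin/env bash
# Hypothetical idempotent version of Step 2: only creates missing groups/users.
run_cmd() {
    if [[ "${DRY_RUN:-0}" == 1 ]]; then echo "$*"; else "$@"; fi
}

create_oracle_accounts() {
    local entry gid name
    for entry in 501:oinstall 502:dba 503:oper 504:asmadmin 505:asmoper 506:asmdba; do
        gid="${entry%%:*}"
        name="${entry#*:}"
        # getent returns non-zero when the group does not exist yet.
        getent group "$name" >/dev/null || run_cmd groupadd -g "$gid" "$name"
    done
    id oracle >/dev/null 2>&1 || run_cmd useradd -g oinstall -G dba,asmdba,oper oracle
    id grid   >/dev/null 2>&1 || run_cmd useradd -g oinstall -G asmadmin,asmdba,asmoper,oper,dba grid
}
```

Usage: `DRY_RUN=1 create_oracle_accounts` to preview, then `create_oracle_accounts` to apply.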

Step 3

Create the directories under /opt

#mkdir -p /opt/grid

#mkdir -p /opt/oracle

Set the owner, group and permissions of these directories

#chown -R grid:oinstall /opt/grid

#chown -R oracle:oinstall /opt/oracle

#chmod -R 775 /opt/grid

#chmod -R 775 /opt/oracle

Step 4

Set passwords for the grid and oracle users

#passwd grid

#passwd oracle

Step 5

Setting System Parameters

i) Edit the /etc/security/limits.conf file and add the following lines:

grid soft nproc 2047

grid hard nproc 16384

grid soft nofile 1024

grid hard nofile 65536

oracle soft nproc 2047

oracle hard nproc 16384

oracle soft nofile 1024

oracle hard nofile 65536
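Appending these lines by hand on a re-run is how duplicate entries end up in limits.conf. A minimal sketch that adds each line only if it is not already present; `add_oracle_limits` is a hypothetical helper and takes the file path as its argument (normally /etc/security/limits.conf, edited as root):

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the Step 5 limits for grid and oracle only if missing,
# so running it twice does not create duplicate lines.
add_oracle_limits() {
    local file="$1" user line
    for user in grid oracle; do
        for line in "soft nproc 2047" "hard nproc 16384" "soft nofile 1024" "hard nofile 65536"; do
            # -x: whole-line match, -F: fixed string (no regex interpretation).
            grep -qxF "$user $line" "$file" 2>/dev/null || echo "$user $line" >> "$file"
        done
    done
}
```

The exact-line match is a deliberate simplification: an existing entry written with different spacing is not recognized as a duplicate.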

ii) Edit the /etc/pam.d/login file and add the following line:

session required pam_limits.so

iii) Edit the /etc/sysctl.conf file and add the following lines:

fs.aio-max-nr = 1048576

fs.file-max = 6815744

kernel.shmmni = 4096

kernel.sem = 250 32000 100 128

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048586

Reboot the system or run the "sysctl -p" command to apply the above settings.
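If a parameter such as fs.file-max is already present in /etc/sysctl.conf, appending a second line leaves two conflicting entries. A minimal sketch that replaces an existing entry instead; `set_sysctl` is a hypothetical helper taking the file, the key and the value:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write "key = value" to a sysctl-style file, replacing any
# existing entry for the same key instead of appending a duplicate.
set_sysctl() {
    local file="$1" key="$2" value="$3" tmp
    tmp="$(mktemp)"
    if [[ -f "$file" ]]; then
        # Keep every line whose name (text before "=", blanks removed) differs from key.
        awk -v k="$key" '{ name = $0; sub(/=.*/, "", name); gsub(/[ \t]/, "", name); if (name != k) print }' \
            "$file" > "$tmp"
    fi
    echo "$key = $value" >> "$tmp"
    # Rewrite the original file in place so it keeps its owner and permissions.
    cat "$tmp" > "$file"
    rm -f "$tmp"
}
```

Usage, as root: `set_sysctl /etc/sysctl.conf kernel.sem "250 32000 100 128"` for each parameter in the list above, followed by `sysctl -p`.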

Step 6

i) Setting the Grid User Environment

Edit the /home/grid/.bash_profile file and add the following lines:

TMP=/tmp; export TMP

TMPDIR=$TMP; export TMPDIR

ORACLE_HOSTNAME=ws1; export ORACLE_HOSTNAME

ORACLE_SID=+ASM; export ORACLE_SID

ORACLE_BASE=/opt/grid; export ORACLE_BASE

ORACLE_HOME=$ORACLE_BASE/product/OraGrid11gR2; export ORACLE_HOME

PATH=$ORACLE_HOME/bin:$PATH; export PATH

if [ $USER = "oracle" ] || [ $USER = "grid" ]; then

if [ $SHELL = "/bin/ksh" ]; then

ulimit -p 16384

ulimit -n 65536

else

ulimit -u 16384 -n 65536

fi

umask 022

fi

ii) Setting the Oracle User Environment

Edit the /home/oracle/.bash_profile file and add the following lines:

TMP=/tmp; export TMP

TMPDIR=$TMP; export TMPDIR

ORACLE_HOSTNAME=ws1; export ORACLE_HOSTNAME

ORACLE_BASE=/opt/oracle; export ORACLE_BASE

ORACLE_HOME=$ORACLE_BASE/OraDB11gR2; export ORACLE_HOME

ORACLE_SID=ORCL; export ORACLE_SID

ORACLE_TERM=xterm; export ORACLE_TERM

PATH=/usr/sbin:$PATH; export PATH

PATH=$ORACLE_HOME/bin:$PATH; export PATH

LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib; export LD_LIBRARY_PATH

CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib; export CLASSPATH

if [ $USER = "oracle" ] || [ $USER = "grid" ]; then

if [ $SHELL = "/bin/ksh" ]; then

ulimit -p 16384

ulimit -n 65536

else

ulimit -u 16384 -n 65536

fi

umask 022

fi

Step 7

Some additional packages are required for a successful installation of the Oracle Grid Infrastructure software. To check whether the required packages are installed on your operating system, use the following command:

# rpm -q binutils elfutils elfutils-libelf gcc gcc-c++ glibc glibc-common glibc-devel compat-libstdc++-33 cpp make compat-db sysstat libaio libaio-devel unixODBC unixODBC-devel|sort
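The output above mixes installed versions with "is not installed" messages. A minimal sketch that prints only the missing packages, relying on rpm -q returning a non-zero exit code for a package that is not installed; `missing_packages` is a hypothetical helper using the same package list:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print only the Step 7 packages that rpm reports as missing.
# Returns 0 when everything is installed, 1 otherwise.
missing_packages() {
    local pkg missing=0
    for pkg in binutils elfutils elfutils-libelf gcc gcc-c++ glibc glibc-common \
               glibc-devel compat-libstdc++-33 cpp make compat-db sysstat \
               libaio libaio-devel unixODBC unixODBC-devel; do
        if ! rpm -q "$pkg" >/dev/null 2>&1; then
            echo "$pkg"
            missing=1
        fi
    done
    return "$missing"
}
```

Usage: `missing_packages || echo "install the packages listed above first"`.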

Step 8

Install the required ASMLib packages

#rpm -ivh oracleasm-support-2.1.3-1.el5.i386.rpm oracleasmlib-2.0.4-1.el5.i386.rpm oracleasm-2.6.18-164.el5-2.0.5-1.el5.i686.rpm

Step 9

Check the available partitions

#ls -l /dev/sd*

Step 10

Both ASMLib and raw devices require the candidate disks to be partitioned before they can be accessed. The following picture shows the "/dev/sda" disk being partitioned.

The remaining disks ("/dev/sdb" and "/dev/sdc") must be partitioned in the same way.

Step 11

To label the disks for use by ASM, perform the following steps:

i) Configure oracleasm with the command

# oracleasm configure -i

and answer the prompts as shown in the screenshot.

ii) Initialize ASMLib with the oracleasm init command. This command loads the oracleasm module and mounts the oracleasm file system.

iii) Use the oracleasm createdisk command to create an ASM disk label for each disk.

In my case the disk names are DISK1, DISK2 and DISK3, as shown in the screenshot.

iv) Check that the disks are visible with the oracleasm listdisks command.

v) Check that the disks are mounted in the oracleasm file system with the command

ls -l /dev/oracleasm/disks
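Steps iii) and iv) can be scripted. The sketch below is a hypothetical helper (`label_asm_disks` is not part of ASMLib) that runs `oracleasm createdisk <label> <partition>` for each LABEL=partition pair it is given and then lists the labels. The partitions /dev/sda1, /dev/sdb1 and /dev/sdc1 in the usage line are assumptions (the first partition created on each disk in Step 10); check yours with ls -l /dev/sd* first. Run it as root; with DRY_RUN=1 it only prints the commands.

```shell
#!/usr/bin/env bash
# Hypothetical helper: label each partition for ASM, then list the ASM disks.
# Arguments: LABEL=partition pairs, e.g. DISK1=/dev/sda1 (partition names are assumptions).
label_asm_disks() {
    local pair label device
    for pair in "$@"; do
        label="${pair%%=*}"
        device="${pair#*=}"
        if [[ "${DRY_RUN:-0}" == 1 ]]; then
            echo "oracleasm createdisk $label $device"
        else
            oracleasm createdisk "$label" "$device"
        fi
    done
    if [[ "${DRY_RUN:-0}" == 1 ]]; then echo "oracleasm listdisks"; else oracleasm listdisks; fi
}

# Usage (preview first, then run for real):
#   DRY_RUN=1 label_asm_disks DISK1=/dev/sda1 DISK2=/dev/sdb1 DISK3=/dev/sdc1
```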

Step 12

i) Extract the Linux_11gR2_grid software into the /mnt directory

# unzip /mnt/Linux_11gR2_grid

Change the owner and group of the grid software directory

# chown -R grid /mnt/grid

# chgrp -R oinstall /mnt/grid

Step 13

i) Now log in as the grid user

ii) Start the installer with the command

./runInstaller

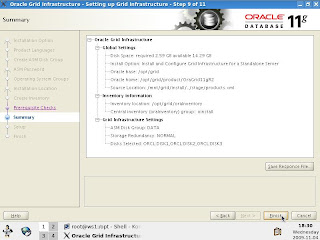

iii) On the first screen of the installer, select Install and Configure Grid Infrastructure for a Standalone Server. Click Next.

iv) On the Select Product Languages screen, select the Languages that should be supported in this installation.

v) On the Create ASM Disk Group screen:

Set Redundancy to Normal

Select the disks: ORCL:DISK1, ORCL:DISK2, and ORCL:DISK3.

Click Next

vi) Specify ASM Password

vii) Privileged Operating System Groups

viii) Specify Installation Location

ix) Perform Prerequisite Checks

x) Summary

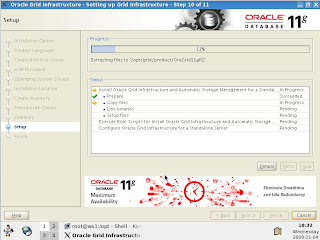

xi) The setup page shows the install progress

xii) The Execute Configuration Scripts page asks you to execute a configuration script as the root user.

xiii) The installation completes successfully.

Output of the root.sh script:

[root@ws1 OraGrid11gR2]# ./root.sh

Running Oracle 11g root.sh script...

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /opt/grid/product/OraGrid11gR2

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root.sh script.

Now product-specific root actions will be performed.

2009-11-04 19:08:14: Checking for super user privileges

2009-11-04 19:08:14: User has super user privileges

2009-11-04 19:08:14: Parsing the host name

Using configuration parameter file: /opt/grid/product/OraGrid11gR2/crs/install/crsconfig_params

Creating trace directory

LOCAL ADD MODE

Creating OCR keys for user 'grid', privgrp 'oinstall'..

Operation successful.

CRS-4664: Node ws1 successfully pinned.

Adding daemon to inittab

CRS-4123: Oracle High Availability Services has been started.

ohasd is starting

ws1 2009/11/04 19:11:01 /opt/grid/product/OraGrid11gR2/cdata/ws1/backup_20091104_191101.olr

Successfully configured Oracle Grid Infrastructure for a Standalone Server

Updating inventory properties for clusterware

Starting Oracle Universal Installer...

Checking swap space: must be greater than 500 MB. Actual 2000 MB Passed

The inventory pointer is located at /etc/oraInst.loc

The inventory is located at /opt/grid/oraInventory

'UpdateNodeList' was successful.

ASM can now be managed with ASMCA.
